A Preliminary Look at Metaculus and Expert Forecasts

The Metaculus prediction platform began participating in the COVID-19 Expert Surveys with Survey 10. Every week, experts and forecasters are polled and the results are sent to the CDC. Of the four surveys that Metaculus has participated in, nine questions have resolved (one of which resolved ambiguously). Four of the unambiguously resolved questions are categorical (binned by probability) and the other four are triplet (median and confidence interval). Categorical questions are scored using a Brier score, in which a lower score represents a better prediction. Triplet questions are scored using the error of the median prediction relative to the resolution value.
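The article does not say which form of the Brier score is used. The sketch below assumes the multi-category form, which sums the squared difference between the forecast probability and the outcome over every bin. This is consistent with the reported scores being larger than a single-bin calculation would give (for example, 27% on the correct bin alone would yield (1 - 0.27)^2 ≈ 0.53, not 0.70). The function name and the four-bin forecast are hypothetical and are not taken from the surveys.

```python
def brier_score(probabilities, correct_bin):
    """Multi-category Brier score (assumed form): sum over all bins of
    (forecast probability - outcome)^2, where outcome is 1 for the bin
    that contains the resolution value and 0 for every other bin."""
    return sum(
        (p - (1.0 if i == correct_bin else 0.0)) ** 2
        for i, p in enumerate(probabilities)
    )


# Hypothetical four-bin forecast, for illustration only.
forecast = [0.10, 0.60, 0.20, 0.10]
score = brier_score(forecast, correct_bin=1)
print(f"Brier score: {score:.2f}")
```

Under this form, a perfect forecast scores 0 and a forecast that puts all of its probability on a single wrong bin scores 2.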

Categorical Question Results

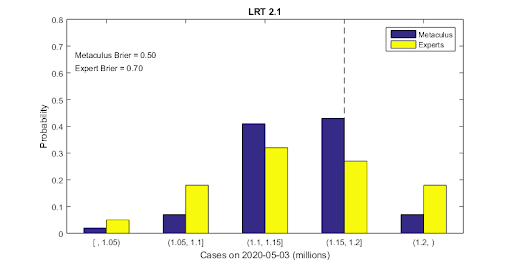

Two categorical questions have resolved unambiguously since Metaculus began participating in the COVID-19 expert surveys. The first to resolve was Survey 11, Question 1 (LRT 2.1 on Metaculus). The question asked how many confirmed cases the US would have on May 3, 2020 and resolved to 1,152,006 confirmed cases. Experts gave the correct result a probability of 27% and earned a Brier score of 0.70. Metaculus gave the correct result a probability of 43% and earned a Brier score of 0.50. The figure below shows the expert and Metaculus predictions. The dashed line represents the resolution value.

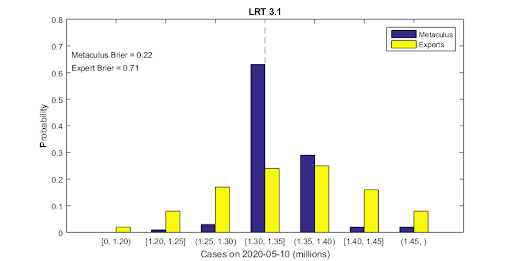

The second categorical question to resolve was Survey 12, Question 1 (LRT 3.1 on Metaculus). The question asked how many confirmed cases the US would have on May 10, 2020 and resolved to 1,322,807 confirmed cases. Experts gave the correct result a probability of 24% and earned a Brier score of 0.71. Metaculus gave the correct result a probability of 63% and earned a Brier score of 0.22.

On average, expert predictions resulted in a Brier score of 0.66. Metaculus predictions resulted in an average Brier score of 0.30.

Triplet Question Results

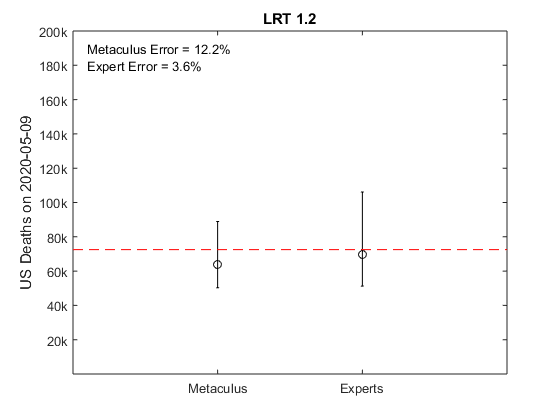

Four triplet questions have resolved since Metaculus began participating in the COVID-19 expert surveys. The first to resolve was from Survey 10 (LRT 1.2 on Metaculus). The question asked how many COVID-19 deaths the US would have on May 9, 2020 and resolved to 72,527. The median of expert predictions was 69,945 resulting in an error of 3.6%. The median of Metaculus predictions was 63,700 resulting in an error of 12.2%. The figure below shows the expert and Metaculus predictions. Prediction bars represent a 90% confidence interval, circles represent the median prediction, and the dashed line represents the resolution value.

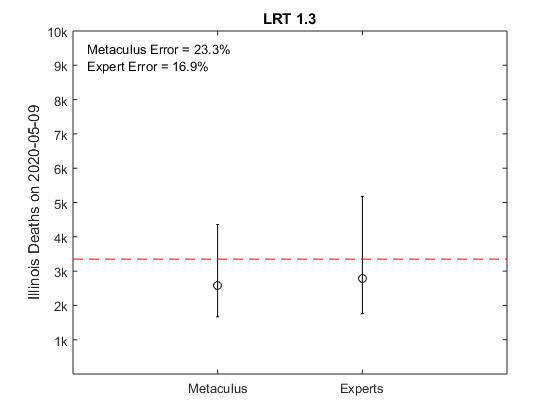

The second triplet to resolve was also from Survey 10 (LRT 1.3 on Metaculus). The question asked how many COVID-19 deaths Illinois would have on May 9, 2020 and resolved to 3,349. The median of expert predictions was 2,784 resulting in an error of 16.9%. The median of Metaculus predictions was 2,570 resulting in an error of 23.3%.

The third triplet to resolve was also from Survey 10 (LRT 1.4 on Metaculus). The question asked how many COVID-19 deaths Louisiana would have on May 9, 2020 and resolved to 2,267. The median of expert predictions was 2,814 resulting in an error of 24.1%. The median of Metaculus predictions was 2,200 resulting in an error of 3.0%.

The fourth triplet to resolve was from Survey 11 (LRT 2.4 on Metaculus). The question asked what the average number of new daily confirmed cases would be in the state of Georgia for the week of May 9, 2020 and resolved to 659. The median of expert predictions was 1,044 resulting in an error of 58.4%. The median of Metaculus predictions was 702 resulting in an error of 6.5%.

On average, the error of the median of expert predictions is 25.7%. The error of the median of Metaculus predictions averages 11.2% (see Table 2). Another interesting aspect of the predictions is the size of the confidence intervals. On average, Metaculus confidence intervals are 27% tighter than the experts'.
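The error figures above can be checked with a short calculation. This is a minimal sketch that assumes the error is the absolute difference between the median prediction and the resolution value, divided by the resolution value. The article does not state the formula, but this definition reproduces the reported percentages. The function and variable names are illustrative.

```python
def median_error(median_prediction, resolution):
    """Absolute error of the median as a fraction of the resolution value
    (assumed definition; it matches the percentages reported in the text)."""
    return abs(median_prediction - resolution) / resolution


# (label, resolution, expert median, Metaculus median), as reported in the text.
triplets = [
    ("US deaths, May 9", 72_527, 69_945, 63_700),
    ("Illinois deaths, May 9", 3_349, 2_784, 2_570),
    ("Louisiana deaths, May 9", 2_267, 2_814, 2_200),
    ("Georgia daily cases, week of May 9", 659, 1_044, 702),
]

expert_errors = [median_error(e, r) for _, r, e, _ in triplets]
metaculus_errors = [median_error(m, r) for _, r, _, m in triplets]

# Unweighted mean across the four questions.
expert_mean = sum(expert_errors) / len(expert_errors)
metaculus_mean = sum(metaculus_errors) / len(metaculus_errors)

for (label, *_), e, m in zip(triplets, expert_errors, metaculus_errors):
    print(f"{label}: experts {e:.1%}, Metaculus {m:.1%}")
print(f"Mean: experts {expert_mean:.1%}, Metaculus {metaculus_mean:.1%}")
```

Because the mean is unweighted, the Georgia question, where the expert median missed by 58.4%, accounts for more than half of the experts' average error.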

Ambiguous Resolutions

So far, one question has resolved as ambiguous on the Metaculus platform: Survey 10, Question 1 (LRT 1.1 on Metaculus). The question asked how many confirmed cases the US would have on April 26, 2020. The resolution criteria stated, “Resolution will be to the number of cases reported on the COVID tracker spreadsheet (on the US Daily 4pm ET tab) on April 26th at 16:00 ET.” The intention of this question was to resolve to the US Daily 4pm cases for April 26 once the page had been updated for that day. However, the standard for Metaculus is to take the resolution criteria literally, which would result in a resolution to the most recent data available at 16:00 ET (data from April 25). As indicated in the discussion prior to the question closing, this discrepancy caused confusion among the Metaculus community. Some forecasters expected that the spreadsheet would not be updated by 16:00 and were thus predicting the number of cases on April 25. Others were predicting the number of cases on April 26 (in the spirit of the question). The results are shown in the figure below.
